Many of our customers have chosen Eclipse CDT as their tooling for developing C/C++ projects, and they frequently complain about the poor runtime of the CDT indexer. I would claim that we (Java developers) are quite spoiled by the excellent performance of the Java tooling in Eclipse.

A quick note about this article: it explains a cache that was contributed to CDT 9.3.2 in ticket 514708 as part of Eclipse Oxygen in 2017.

Indexing a large Java workspace at startup may take several seconds, for really large projects maybe a few minutes, but afterwards it rarely blocks the sophisticated Java tooling. Be it large code imports or refactorings, Eclipse Java tooling handles that very well. But when developing a large C project, indexing may take several minutes, maybe even half an hour or more, depending on the available memory and CPU of course. This was the trigger for us to put some effort into analyzing the hotspots of the CDT indexer with the goal of improving its performance.

To make a long story short, an additional cache of internal string handling may speed up indexing time of certain projects by nearly 40%!

Factors that Influence Indexer Performance

First of all, let’s investigate the different factors that influence the indexer performance:

- Java heap size that is available to the indexer for a cache: If the memory used internally for building and managing the index is too low, parts of the index are stored on the hard drive, and we all know that writing data to and reading it from a hard drive is much more expensive than accessing it in main memory. The CDT indexer has a preference option to allow it to consume up to 50% of the available heap size for an internal cache.

- Structure of the C/C++ source files: If the sources are organized in a flat hierarchy, i.e. with few includes, and if they do not contain thousands of macro definitions, the indexer is much faster than with sources that have a high degree of includes and plenty of macro definitions.

- Hardware resources: The faster the CPU / hard drive / memory, the faster the indexer – obviously ;-)

We don’t have much influence on the latter two factors, but at least we can configure Eclipse and the CDT indexer to have enough memory available (e.g. 1 GB). But still, performance is far from acceptable (some customers even reported times of 45 minutes and longer).

So we took one of our customer’s projects, an AUTOSAR project, which had an indexing time of slightly more than 10 minutes on my machine, and used the YourKit Java Profiler to search for some hotspots:

- Observation: within 1 minute, the indexer created more than 4 billion (!) objects of type ShortString and LongString.

- Observation: most of the computation time is spent comparing large char arrays (e.g. length > 70,000), which are represented by long strings.

To tackle these two issues, we first need to understand the actual problem:

- Why are so many objects created (and garbage collected just as fast)?

- What are these long strings?

- Why is it necessary to compare them?

- How can we improve string handling and comparison?

Code Structure Matters!

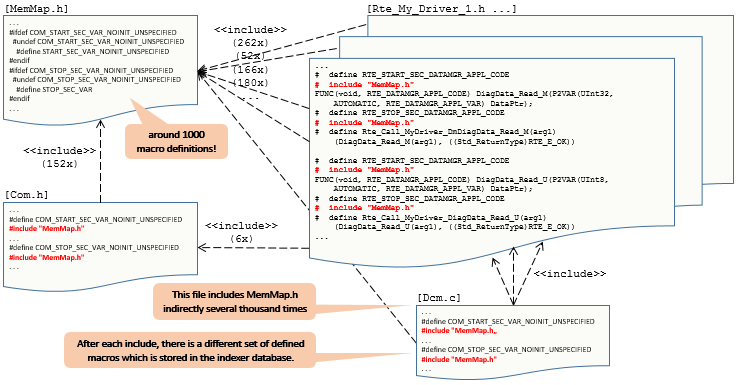

When looking into these long strings that are frequently compared, it turns out that they consist of plenty of macro definitions concatenated in alphabetical order. It seems that they are calculated for particular locations in the source files for fast lookup. (To be honest, I did not (yet) fully understand how the indexer is working in detail, e.g. why it needs to store so many long but very similar strings – so please correct me if the conclusions to my observations are not correct.) We found the reason for these incredible long list of macro definitions inside the code structure which is sketched below:

There is one large header file (MemMap.h) which contains more than 1,000 macro definitions, and there are many other header files that include this file, even multiple times. New macro definitions and includes of MemMap.h alternate, resulting in a different set of defined macros at each location. These macro definitions are stored by the indexer inside a data structure that is basically a super large char array. For adding and searching entries (e.g. the set of defined macros at a particular location), a binary tree data structure is used on top of that super large char array. Whenever a candidate string is to be added to or looked up in this binary tree, a visitor is used to compare existing entries with that candidate. The comparison must decide whether the candidate entry is smaller or larger than existing entries, which requires the strings to be compared character by character. For each entry to be compared, a new ShortString or LongString object is created, which is a wrapper around the actual string with a java.lang.String-like API for comparison.

First Attempt: Reducing Object Creation

Our first guess was that the immense number of Short- and LongString objects may slow down the overall performance, so we reduced that as far as possible:

- Whenever a new string is stored inside the database, most of the time its location in the data structure (an index of the super large char array) is of interest, so we directly returned that index instead of creating (and immediately disposing) a Short-/LongString object.

- Whenever some particular string is requested via such an index (e.g. the represented char array or a java.lang.String instance), we directly retrieved that data instead of creating (and immediately disposing) a Short-/LongString object.

- We only kept the Short-/LongString objects where they are needed for comparisons.

Although far fewer objects were created, the indexer run time was not affected at all. It seems that the Java compiler and the VM perform enough optimizations that the overhead of creating wrapper objects does not really affect performance.

Second Attempt: Optimizing Comparison of Char Arrays

Whenever two LongString instances are compared (i.e. determining their lexicographical order), ShortString.compareChars(..) is called. This looks odd. For understanding how and why this could be optimized, we must first understand the difference between a ShortString and a LongString.

The database (the super large char array) is divided into chunks of 4096 chars. Whenever a string should be stored inside the database, one or more chunks must be allocated. If the string to be stored is larger than the available space inside one chunk, it must be split over multiple chunks.

- A ShortString is shorter than the size of a chunk, so it fits into a single chunk.

- A LongString is larger than the size of a single chunk, so it must be split and distributed over multiple chunks.

A pointer to the beginning of the stored string is enough to represent the location and to retrieve it again when needed (the last entry in a chunk is a pointer to the next chunk).

The costly comparison in our AUTOSAR project compares strings with length > 70,000, so it compares strings that are stored in ⌈70,000 / 4,096⌉ = 18 chunks. The current implementation loads the entire char array of full length for both strings to be compared, and compares them character by character, even if they already differ in a character contained in one of the first chunks. Our idea is a new compare logic for long strings, LongString.compare(..), which loads and compares characters chunk by chunk to decide their lexicographical order. This implementation is listed in bug 515856.
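The chunk-by-chunk idea can be sketched in a few lines of Java. This is only an illustration under simplifying assumptions, not the code attached to bug 515856: the names ChunkedString, loadChunk and chunksLoaded are hypothetical, and the chunks are modeled as plain in-memory arrays instead of pieces of the database.

```java
import java.util.Arrays;

/** Hypothetical stand-in for a long string stored across fixed-size chunks. */
final class ChunkedString {
    static final int CHUNK_SIZE = 4096; // chars per chunk, as described above

    private final char[][] chunks; // each inner array models one database chunk
    private final int length;
    int chunksLoaded = 0;          // counts simulated chunk reads

    ChunkedString(String value) {
        length = value.length();
        int count = (length + CHUNK_SIZE - 1) / CHUNK_SIZE; // ceiling division
        chunks = new char[count][];
        for (int i = 0; i < count; i++) {
            int start = i * CHUNK_SIZE;
            int end = Math.min(length, start + CHUNK_SIZE);
            chunks[i] = value.substring(start, end).toCharArray();
        }
    }

    int chunkCount() {
        return chunks.length;
    }

    private char[] loadChunk(int index) {
        chunksLoaded++;
        return chunks[index];
    }

    /** Lexicographic comparison that stops loading chunks at the first difference. */
    static int compare(ChunkedString a, ChunkedString b) {
        int common = Math.min(a.chunks.length, b.chunks.length);
        for (int i = 0; i < common; i++) {
            char[] ca = a.loadChunk(i);
            char[] cb = b.loadChunk(i);
            int n = Math.min(ca.length, cb.length);
            for (int j = 0; j < n; j++) {
                if (ca[j] != cb[j]) {
                    return ca[j] - cb[j];
                }
            }
            // A shorter chunk can only be the last chunk of its string,
            // so that string is a proper prefix of the other one.
            if (ca.length != cb.length) {
                return ca.length - cb.length;
            }
        }
        return a.length - b.length;
    }

    public static void main(String[] args) {
        char[] x = new char[70_000];
        Arrays.fill(x, 'a');
        char[] y = x.clone();
        y[10] = 'b'; // the two strings already differ inside the first chunk
        ChunkedString a = new ChunkedString(new String(x));
        ChunkedString b = new ChunkedString(new String(y));
        System.out.println("result sign: " + Integer.signum(compare(a, b)));
        System.out.println(a.chunkCount() + " chunks, " + a.chunksLoaded + " loaded");
    }
}
```

In this sketch, two strings of 70,000 chars that differ near the beginning are ordered after reading a single chunk each, whereas a full-length comparison would first have to assemble all 18 chunks of both strings.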

With this optimization, indexer performance is in fact increased for our AUTOSAR project: instead of 680 seconds, the indexer finished in 640 seconds. This is an improvement of roughly 6% with no additional memory consumption.

Third Attempt: Caching Char Arrays

The previous two attempts already explained that Short-/LongString instances are always newly created for each access to strings stored inside the super large char array. Moreover, each access to the actual string (represented by a char array stored in one or more chunks) retrieves the desired char array from the chunks again, e.g. for comparing it against another string. The indexer already has a built-in cache which holds recently accessed chunks in memory until the indexer cache size limit is hit. If a requested chunk is not inside the cache, it must be loaded from the super large char array. However, the actual char array for comparison is still reconstructed inside the Short-/LongString classes on each access.

Instead of caching chunks, we tried to cache the actual Short-/LongString objects. Moreover, instead of having a fixed cache size, we use Java references to let the garbage collector free some memory when needed. So whenever a new Short-/LongString is retrieved from the database and constructed from its chunks, it is stored by its long pointer in a map of references. Another access to the same string first checks whether the string is still contained in that map. If so, we can directly return it; if not, we need to reconstruct the string from the chunks and add it to the map again. It was a bit tricky to let the garbage collector free the memory for both the string value and the key (the long pointer), but it is possible with negligible overhead. This implementation is listed in bug 514708.
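A minimal sketch of such a reference-based cache is shown here. It is not the patch from bug 514708: the class StringCache, its loader callback and the use of char[] as the cached value are assumptions made for illustration, and the sketch is not thread-safe. It uses soft references; swapping SoftReference for WeakReference gives the weak variant.

```java
import java.lang.ref.Reference;
import java.lang.ref.ReferenceQueue;
import java.lang.ref.SoftReference;
import java.util.HashMap;
import java.util.Map;
import java.util.function.LongFunction;

/** Hypothetical cache of reconstructed strings, keyed by their long pointer. */
final class StringCache {

    /** Soft reference that remembers its key so the map entry can be removed later. */
    private static final class Entry extends SoftReference<char[]> {
        final long pointer;

        Entry(long pointer, char[] value, ReferenceQueue<char[]> queue) {
            super(value, queue);
            this.pointer = pointer;
        }
    }

    private final Map<Long, Entry> map = new HashMap<>();
    private final ReferenceQueue<char[]> queue = new ReferenceQueue<>();
    private final LongFunction<char[]> loader; // rebuilds a string from its chunks

    StringCache(LongFunction<char[]> loader) {
        this.loader = loader;
    }

    char[] get(long pointer) {
        removeClearedEntries();
        Entry ref = map.get(pointer);
        char[] value = (ref != null) ? ref.get() : null;
        if (value == null) {
            // Not cached (or already cleared by the garbage collector): rebuild and re-add.
            value = loader.apply(pointer);
            map.put(pointer, new Entry(pointer, value, queue));
        }
        return value;
    }

    /** Drops the keys of all entries whose values were garbage collected. */
    private void removeClearedEntries() {
        Reference<? extends char[]> cleared;
        while ((cleared = queue.poll()) != null) {
            Entry entry = (Entry) cleared;
            // Two-argument remove: only removes if the map still holds this exact reference.
            map.remove(entry.pointer, entry);
        }
    }

    int size() {
        return map.size();
    }

    public static void main(String[] args) {
        StringCache cache = new StringCache(p -> ("string@" + p).toCharArray());
        char[] first = cache.get(42L);
        char[] second = cache.get(42L);
        System.out.println("same instance: " + (first == second));
    }
}
```

The Entry subclass carries the key so that, once the garbage collector has cleared a value and enqueued its reference, the corresponding map entry can be removed as well. Without that step the values would be freed but the keys would stay in the map.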

The resulting indexer performance in our AUTOSAR project looks promising: instead of 680 seconds, the indexer finished in 430 seconds. This is an improvement of roughly 37%!

Improved performance is typically a tradeoff between runtime and memory consumption. The above-mentioned patch uses weak references, which the garbage collector may clear once nothing else references the cached string (soft references, the other variant we benchmarked, are typically kept until heap space becomes scarce). Consequently, the newly introduced cache takes additional memory if available, and the garbage collector takes care of cleaning it up when needed.

Benchmarking

To quantify the performance improvements, we ran some benchmark tests for the latter two attempts. We tried several variants of the string cache to reduce memory consumption at the cost of slightly more code complexity. As test projects, we used the already mentioned AUTOSAR project as well as the PHP open source project, which has a totally different source code structure. Each project was indexed between 11 and 20 times with an indexer cache between 32 and 1024 MB (which did not noticeably affect performance). The results are shown in the table below. We also tried several other open source projects but failed to produce reasonable benchmark results: either the indexing time differed by just 1-2 seconds for a total indexing time between 15 and 45 seconds (samba, vlc, ffmpeg), or the indexer aborted because of too many errors (Linux kernel, gcc). All results are listed here.

| Project | AUTOSAR [20 runs] | | | PHP [11 runs] | | |
|---|---|---|---|---|---|---|
| Original runtime in seconds | 576 | 545 | 667 | 54 | 51 | 59 |
| LongString.compare (Second Attempt) | 509 | 485 | 571 | 55 | 50 | 60 |
| Weak (1) String Cache (Third Attempt) [see explanation for note (1) below] | 370 | 342 | 419 | 56 | 51 | 64 |
| Soft (1) String Cache (Third Attempt) | 366 | 337 | 410 | 54 | 49 | 61 |
| LongString.compare & Weak String Cache | 441 | 422 | 503 | 56 | 53 | 60 |
| LongString.compare & Soft String Cache | 389 | 347 | 432 | 54 | 49 | 59 |
| Weak String Cache with disposing keys (2) | 371 | 342 | 421 | 55 | 51 | 59 |
| Soft String Cache with disposing keys | 365 | 336 | 406 | 56 | 51 | 61 |
| Weak String Cache with hard cache (3) | 380 | 338 | 449 | 56 | 52 | 59 |
| Soft String Cache with hard cache | 374 | 337 | 442 | 56 | 51 | 60 |
| Weak String Cache with hard cache | 370 | 340 | 420 | 55 | 49 | 63 |
| Soft String Cache with hard cache | 369 | 335 | 413 | 55 | 51 | 61 |

The first row is the original indexer run time. The subsequent rows show the results of the second and third attempt in several combinations and with several adjustments of the string cache. Unfortunately, the benchmark results of the PHP project vary so much that there is no clear improvement for any of the listed attempts. There seems to be some other factor that makes the indexer runtime vary. At least the different attempts do not observably slow down the indexer runtime.

As you can see in the highlighted cells for the AUTOSAR project, the string cache may speed up indexing time by up to 39%! The LongString-specific compare implementation, on the other hand, only results in a speedup of 6-18%. Quite interesting is that a combination of the second and third attempt performs worse than the third attempt alone, but I don’t have any reasonable explanation for this.

Some further remarks to the variants we used for benchmarking:

(1) Ethan Nicholas says that “softly reachable objects are generally retained as long as memory is in plentiful supply. This makes them an excellent foundation for a cache”. The results confirm this citation.

(2) With a ReferenceQueue, the disposal of strings also disposes their keys in the map, so the garbage collector is capable of entirely wiping the string cache if necessary. Fortunately, this overhead does not have significant impact on performance – it even performs better than without key disposal.

(3) If memory gets scarce, weak and soft references are disposed by the garbage collector. But what if the most recently used strings were kept in memory anyhow? We used a hard cache to test this setting. Unfortunately, this does not yield any better performance. So we can omit this overhead.
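For illustration, the hard cache from note (3) could look like the following bounded map with least-recently-used eviction. The class name HardCache, the capacity parameter and the use of char[] values are assumptions for this sketch; the benchmarked implementation may differ.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical "hard" cache: strong references to the most recently used strings. */
final class HardCache extends LinkedHashMap<Long, char[]> {
    private static final long serialVersionUID = 1L;

    private final int capacity;

    HardCache(int capacity) {
        super(16, 0.75f, true); // true = iterate in access order (least recently used first)
        this.capacity = capacity;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<Long, char[]> eldest) {
        // Called by put(): evict the least recently used entry once the limit is exceeded.
        return size() > capacity;
    }

    public static void main(String[] args) {
        HardCache cache = new HardCache(2);
        cache.put(1L, "one".toCharArray());
        cache.put(2L, "two".toCharArray());
        cache.get(1L);                        // pointer 1 becomes the most recently used
        cache.put(3L, "three".toCharArray()); // evicts pointer 2
        System.out.println(cache.keySet());
    }
}
```

As long as a string is held in such a map, it stays strongly reachable, so a weak or soft reference to the same string in the reference-based cache cannot be cleared.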

Some words about additional memory consumption by the cache keys:

The life cycle of the string cache is bound to the life cycle of the indexer for a particular project. So the entire cache is discarded as soon as the indexer is discarded for a project. During the indexing task, however, the cache will be filled with keys (long pointers) to the cached strings, which are wrapped in weak or soft references. So whenever heap space becomes scarce, the garbage collector may dispose of the cached strings (which happened between 2 and 90 times in the benchmarks above). When the indexer was done with the AUTOSAR project, the map had around 60,000 keys and only 2,000 cached strings; the rest had been garbage collected. Without disposing the keys, memory consumption for these keys is 60,000 * 8 bytes (long value) = 480 KB, which is less than 1/500 of the default indexer cache size. IMHO, this temporary extra memory is more than tolerable for the achieved performance boost. Fortunately, the extra logic for disposing keys via a ReferenceQueue does not affect the performance at all, so it does not hurt to clean that up, too :-)

By the way, the modifications with the best results are provided in bug 514708.

Join the Discussion!

For code with fewer includes and fewer macro definitions, the exact same problems may not arise, but maybe the string lookup inside the binary tree is still the bottleneck. Please leave a comment if you have made similar or other observations. Do you have further ideas or experience on how to improve performance?
